Salinity | Artify the Earth



SongSAT, From Bands to a Band

Background:

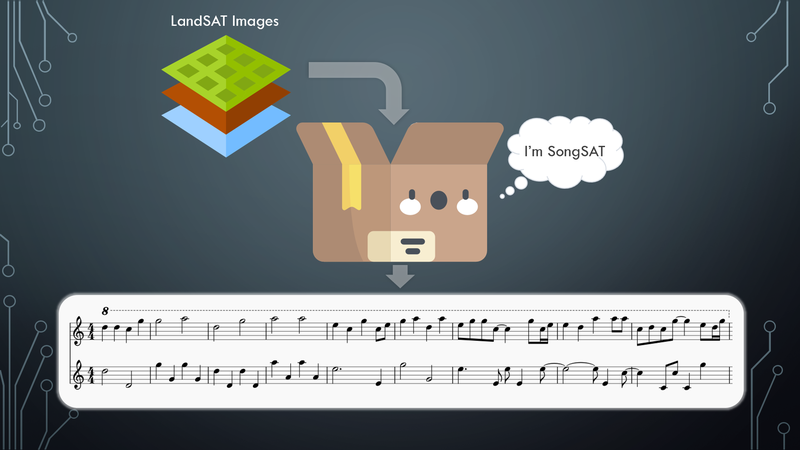

SongSAT is a tool for sharing the beauty of the world in a different medium: it expresses satellite imagery as audio. This lets people with visual impairments enjoy the wonders of the world from above, and it gives musicians a source of ideas when they hit a writing block.

Our team produced an algorithm, SongSAT, that converts four distinct geographical areas (grassland, forest, coastal/water, and mountain) into songs with distinct, recognizable musical patterns, written out as MIDI. We then brought the MIDI files into MuseScore, where we used different instruments and virtual instrument plugins to further enhance the auditory experience.

You can hear more locations at http://songsat.ca. The algorithm is 100% open source; you can view the source code and run it yourself from http://github.com/mcvittal/SongSAT

How it works:

The first thing SongSAT does is determine the predominant land class of the image. To do this, we sample 400 points on the input image and extract the land class at each sample location from the Global Land Cover Facility MODIS dataset (http://glcf.umd.edu/data/lc/). Because the MODIS dataset does not have mountains as a land cover classification, we also check whether each point falls in a mountainous region, using a dataset provided by the United Nations (https://www.unep-wcmc.org/resources-and-data/mount...). If the majority of the points fall within mountainous pixels, SongSAT returns mountainous as the land cover classification; otherwise it returns the dominant land cover class found in the MODIS dataset.
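The decision rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the project's actual code: the function names `sample_points` and `dominant_land_class` are hypothetical, and the sketch assumes the land class and the mountain flag for each sampled point have already been looked up from the two datasets.

```python
import random
from collections import Counter


def sample_points(width, height, n=400, seed=None):
    """Pick n random (x, y) pixel coordinates inside the image (hypothetical helper)."""
    rng = random.Random(seed)
    return [(rng.randrange(width), rng.randrange(height)) for _ in range(n)]


def dominant_land_class(modis_classes, in_mountain):
    """Return the land class for an image from per-point lookups.

    modis_classes: one land cover label per sampled point (assumed already
                   extracted from the MODIS raster).
    in_mountain:   one boolean per sampled point, True if the point falls
                   inside the mountain dataset.
    """
    if not modis_classes or len(modis_classes) != len(in_mountain):
        raise ValueError("need one label and one mountain flag per sample point")
    # Mountains win only if more than half of the points are mountainous.
    if sum(in_mountain) > len(in_mountain) / 2:
        return "mountain"
    # Otherwise fall back to the most frequent MODIS class.
    return Counter(modis_classes).most_common(1)[0][0]
```

For example, `dominant_land_class(["forest", "water", "forest"], [False, False, True])` falls through to the MODIS vote because only one of three points is mountainous.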

In order to have music that represents the landscape, a bit of human intervention was needed to guide the algorithm in the right direction. Due to time constraints, we were only able to create themes for four dominant land cover classes (forest, water, mountain, and grassland). Given more time, we would create themes for the remaining land cover classes (arctic, desert, and urban).

Each theme has a corresponding set of notes that it can play: the water theme uses a pentatonic scale, the mountain theme uses a combination of semitone clusters and a variation on the minor scale, and the other two use variations on the major scale. Each theme also has a set of rhythms, selected at random, for the two voices in the melody.

SongSAT takes each pixel value (0-255) modulo the number of notes in the selected scale. The result is a scale degree, which is mapped to the corresponding note in that scale. To keep the melody line flowing rather than disjointed, SongSAT ensures that no jump larger than a perfect fifth occurs. For example, if the interval is from C to A, it will go down a minor third instead of up a major sixth.

To make a pleasing accompaniment, the first melody note of each bar becomes the accompaniment note for that bar, and the accompaniment switches octaves on each note change to add movement and variation. Forest and mountain are exceptions: forest uses a sixteenth-note arpeggiated bass line, and mountain uses an aggressive syncopated rhythm, to better match the mood of the area. All these values are written to a MIDI file as output.
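The pixel-to-note mapping and the leap limit can be illustrated with a short Python sketch. It is written under stated assumptions and is not taken from the SongSAT source: the `PENTATONIC` offsets (C major pentatonic), the starting pitch of MIDI note 60, and all function names are hypothetical. The leap limit is implemented here by always choosing the octave closest to the previous note, which keeps every interval within a tritone and therefore within the perfect-fifth limit; the real program may enforce the rule differently.

```python
# Semitone offsets from the tonic; C major pentatonic is an assumed example scale.
PENTATONIC = [0, 2, 4, 7, 9]


def pixel_to_pitch_class(pixel, scale):
    """Reduce a 0-255 pixel value to a scale degree, then to a pitch class."""
    degree = pixel % len(scale)
    return scale[degree]


def nearest_pitch(prev_midi, pitch_class):
    """Place pitch_class in whichever octave lies closest to prev_midi."""
    base = prev_midi - (prev_midi % 12) + pitch_class
    candidates = (base - 12, base, base + 12)
    # Ties (a tritone either way) resolve to the lower note.
    return min(candidates, key=lambda p: (abs(p - prev_midi), p))


def melody_from_pixels(pixels, scale, start=60):
    """Turn a sequence of pixel values into MIDI note numbers.

    No range clamping is done, so a very long melody could drift outside
    the valid MIDI range of 0-127.
    """
    notes = []
    prev = start
    for px in pixels:
        prev = nearest_pitch(prev, pixel_to_pitch_class(px, scale))
        notes.append(prev)
    return notes
```

With these definitions, `nearest_pitch(60, 9)` moves from middle C (60) to the A below it (57), a descending minor third, rather than to the A a major sixth above (69).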

The resulting MIDI file was then brought into MuseScore, a free and open-source notation program, to select instruments that better fit the mood of the piece. The forest theme uses the default harp sound that ships with MuseScore, while the rest use instruments chosen from a third-party soundfont file to make the songs sound better and slightly less artificial.

Where it will go next:

If our team chooses to keep improving SongSAT, the next step would be to add the remaining land classification themes so that SongSAT has global coverage. We would also add more rhythm options so the songs feel less monotonous and repetitive, and possibly add multiple sections to the generated songs. Once these key parts are improved, the step after that would be to make the program more user-friendly and accessible so that anyone can install and run it, or to turn it into a proper web application that generates the music on the fly.

Challenges Faced:

- Selecting the scales and rhythms that would create the appropriate feel for the image being viewed

Resources:

- Python 3 libraries (numpy, midiutil, PIL, matplotlib) and the standard-library random module

- GDAL command-line binaries

- MuseScore

- Nice-Key-suite (for enhanced audio)

- Landsat 5 TM data as the input imagery for generating audio

SpaceApps is a NASA incubator innovation program.