TEAMMATE | Design by Nature

Team Updates


Can You Build A…Design by Nature

Design an autonomous free-flyer to inspect a spacecraft for damage from Micrometeoroid and Orbital Debris (MMOD)

TEAMMATE

Douglas Montanha Giordano, douglasmontanhagiordano@gmail.com

Juarez Corneli Filho, juarezcornelifilho@gmail.com

Liége Maldaner, liege.malda@gmail.com

Lucas Prediger, lucasprediger@hotmail.com

Luciano da Rosa, luciano-darosa@hotmail.com

Marcelo Leocaldi Coutinho, marcelo.pix@gmail.com

Marcelo Serrano Zanetti, marcelo.zanetti@ufsm.br

Introduction

Upon entering a planet's atmosphere, a spacecraft is subjected to extreme temperatures. To protect the vehicle from damage and the crew from injury, it carries an insulating layer called the thermal protection system (TPS). Because the TPS is partially exposed, it may be damaged by collisions with debris or micrometeoroids at any point during a space mission. Inspecting the TPS before relying on it for a landing is therefore critical to the success of any mission and to the safety of its crew.

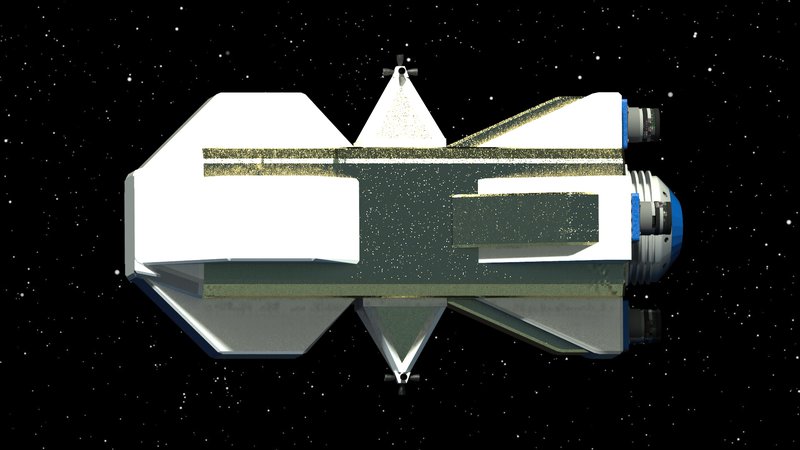

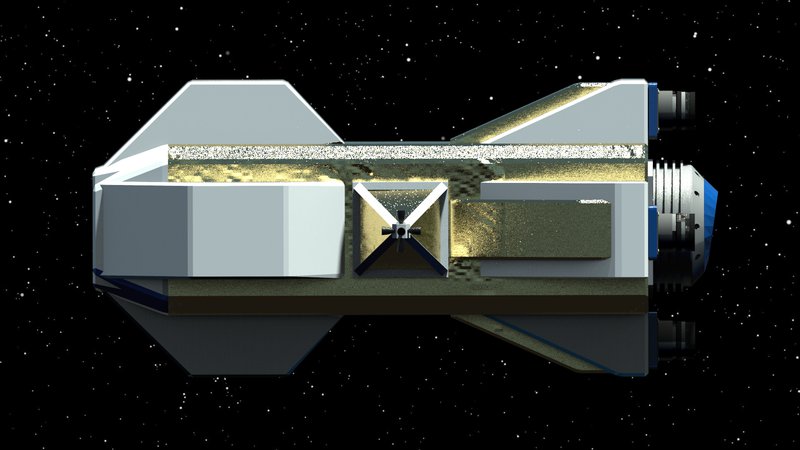

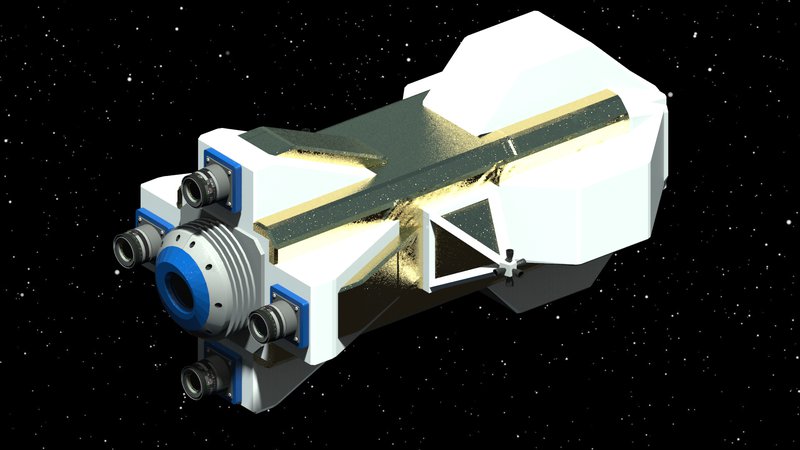

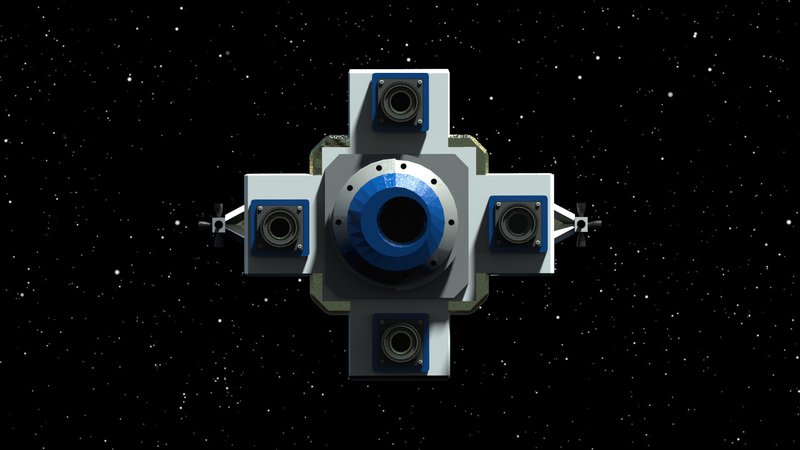

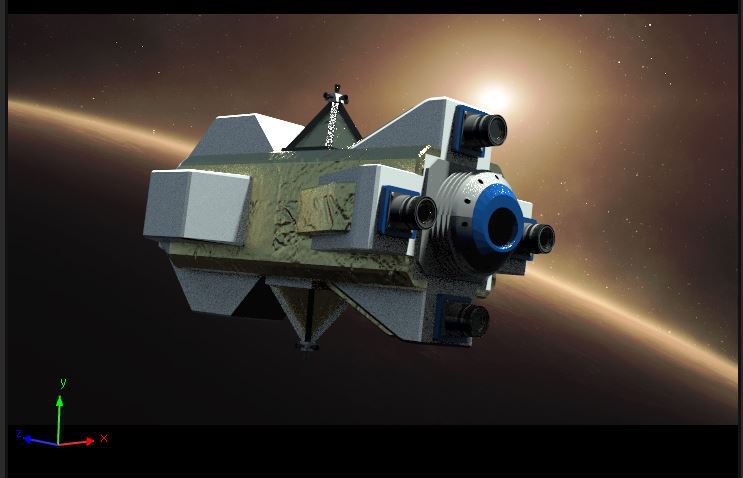

This project answers the challenge of building a Free-Flyer that inspects the entire surface of a spacecraft's TPS before landing, detecting possible damage autonomously and without any physical connection to another spacecraft, using a design inspired by nature.

Design Goals for the Free-Flyer

Our engineering design seeks to minimize the Free-Flyer’s volume and mass, using commercially available components whenever possible. The components of the Free-Flyer are:

- Metallic Housing

- Multi-Spectral Sensors: Optical, Infrared, and LiDAR (Light Detection and Ranging)

- Rechargeable Batteries

- Fuel and Propulsion System

- Attitude Control System: 3-Axis Reaction Wheels

- High-Performance Computing System With GPU

- Space / Military Standard Connectors

- Operating System

- Real-time Communication and Data Transfer System

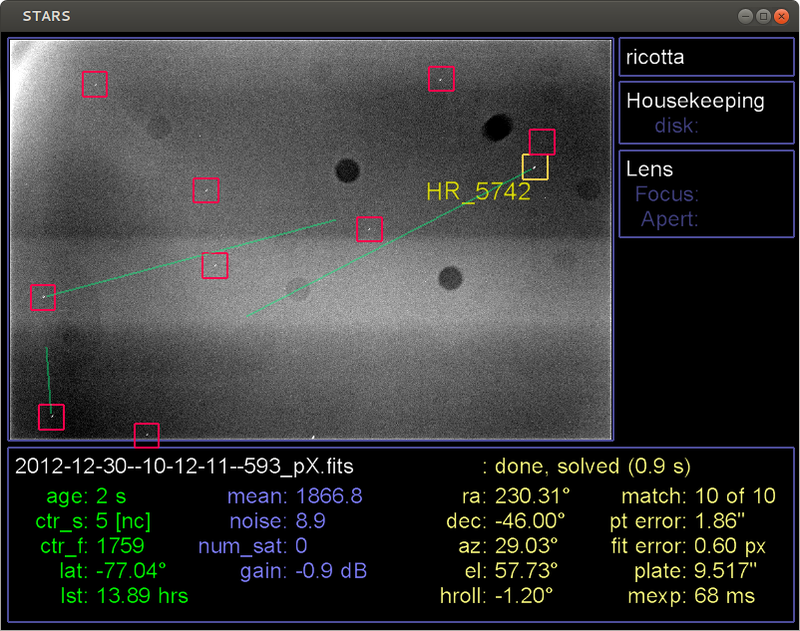

- Radio Trilateration System and Star-Tracker for Coarse Navigation

Inspection Protocol

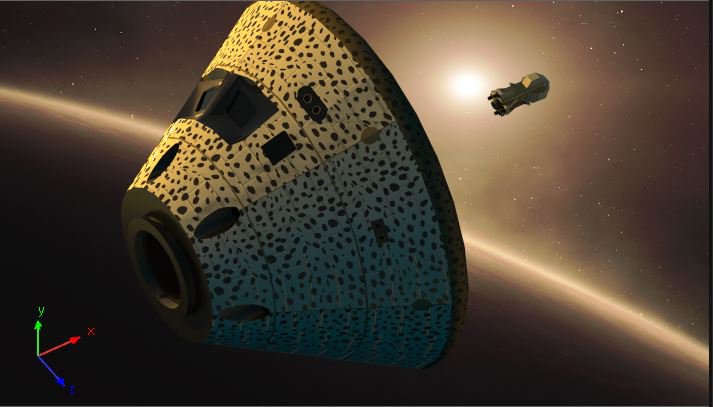

The Multi-Spectral Sensors use the LiDAR as the main tool to identify damage to the TPS. The LiDAR scans the whole surface of the Spacecraft and generates a high-precision 3D model (1 µm). By comparing this model with a reference model produced in the laboratory under controlled conditions, statistical methods can be applied to detect possible holes and cracks in the TPS.
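The statistical comparison can be illustrated with a minimal sketch; it is not the team's implementation. It assumes the LiDAR scan and the reference model have already been registered and resampled onto the same grid of surface heights, and it uses a robust z-score (median and median absolute deviation) as one possible statistical test. The function `flag_damage`, the grid layout, and the threshold `k` are hypothetical.

```python
from statistics import median


def flag_damage(scan, reference, k=5.0, min_sigma=1e-9):
    """Return (row, col) cells where the scan deviates abnormally from the reference.

    Hypothetical inputs: `scan` and `reference` are equally sized 2D lists of
    surface heights sampled on the same, already registered grid.
    """
    cols = len(reference[0])
    # Residual height at every grid cell, flattened row by row
    residuals = [s - r for srow, rrow in zip(scan, reference) for s, r in zip(srow, rrow)]
    # Robust estimates of the centre and spread of the residuals
    med = median(residuals)
    mad = median(abs(x - med) for x in residuals)
    sigma = max(1.4826 * mad, min_sigma)  # 1.4826 * MAD ~ std. dev. for Gaussian noise
    # Cells more than k robust standard deviations away are candidate holes or cracks
    return [divmod(idx, cols) for idx, x in enumerate(residuals) if abs(x - med) > k * sigma]


reference = [[0.0] * 4 for _ in range(4)]
scan = [
    [0.5, -0.3, 0.2, 0.1],
    [0.0, 0.4, -0.2, 0.3],
    [-0.1, -50.0, 0.2, -0.4],  # a deep pit at row 2, column 1
    [0.3, 0.1, -0.3, 0.0],
]
print(flag_damage(scan, reference))  # [(2, 1)]
```

Median-based statistics are used here so that the damaged cells themselves do not inflate the noise estimate, as a plain mean and standard deviation would.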

To scan the whole TPS surface, the Free-Flyer has to move around the Spacecraft. To save propellant, our inspection protocol does not use a helical trajectory around the Spacecraft: by Newton's laws, following a curved trajectory requires the Free-Flyer to accelerate continuously, which consumes large amounts of propellant.
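The propellant argument can be made concrete with a back-of-the-envelope estimate. Staying on a circular path of radius r at speed v requires a centripetal acceleration v²/r for the whole manoeuvre, so the delta-v grows with time, whereas a uniform straight-line leg needs only one burn to start and one to stop. This sketch ignores orbital relative-motion effects (such as Clohessy-Wiltshire dynamics) and the axial component of a helix; the function names and numbers are illustrative assumptions.

```python
def circling_delta_v(speed, radius, duration):
    """Delta-v (m/s) to stay on a circle of `radius` (m) at `speed` (m/s) for `duration` (s).

    The centripetal acceleration speed**2 / radius must be supplied by thrusters the whole time.
    """
    return speed ** 2 / radius * duration


def linear_delta_v(speed, legs=1):
    """Delta-v (m/s) for `legs` uniform straight-line legs: one start and one stop burn each."""
    return 2.0 * speed * legs


# Illustrative numbers only: 0.1 m/s at a 5 m standoff, one hour of scanning
print(circling_delta_v(0.1, 5.0, 3600.0))  # about 7.2 m/s
print(linear_delta_v(0.1, legs=6))         # about 1.2 m/s
```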

Our approach requires maneuvering the Spacecraft itself: it is set spinning slowly around its longitudinal axis, while the Free-Flyer moves linearly and uniformly along it. As the Spacecraft spins, the Free-Flyer scans whole segments of the TPS surface without having to circle it, which uses much less propellant than a helical trajectory around the Spacecraft. However, while the Spacecraft spins, its crew must remain strapped into their seats to avoid accidents and injuries.

The Free-Flyer's linear movement is organized in “steps” that divide the Spacecraft into a predetermined number of sections. The number of sections depends on the LiDAR sensor’s angle of detection and is chosen so that the whole TPS surface is swept, with some overlap between adjacent sections.
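The step planning can be sketched under simple assumptions: the LiDAR's angle of detection is treated as a field of view covering a strip of width 2·d·tan(θ/2) at standoff distance d, the Free-Flyer stops at evenly spaced positions along the longitudinal axis so that adjacent strips overlap by at least a chosen fraction, and at each stop it dwells for one full revolution of the spinning Spacecraft. All names, parameters, and numbers are hypothetical.

```python
import math


def plan_steps(length, standoff, fov_deg, overlap=0.2):
    """Return stop positions (m) along the Spacecraft's longitudinal axis.

    Hypothetical model: each stop scans a strip of width 2*d*tan(fov/2); adjacent
    strips overlap by at least `overlap` (a fraction of the strip width).
    """
    strip = 2.0 * standoff * math.tan(math.radians(fov_deg) / 2.0)
    if strip >= length:
        return [length / 2.0]  # a single stop covers the whole length
    max_step = strip * (1.0 - overlap)
    n = math.ceil((length - strip) / max_step) + 1
    # Spread the n strip centres evenly between the two ends of the Spacecraft
    first, last = strip / 2.0, length - strip / 2.0
    spacing = (last - first) / (n - 1)
    return [first + k * spacing for k in range(n)]


def scan_time(n_stops, spin_rpm):
    """Total dwell time (s) if the Free-Flyer waits one full spin at each stop."""
    return n_stops * 60.0 / spin_rpm


stops = plan_steps(length=10.0, standoff=2.0, fov_deg=60.0)
print(len(stops))                           # 6
print(scan_time(len(stops), spin_rpm=1.0))  # 360.0
```

Rounding the number of stops up and then spacing them evenly means the actual overlap is never smaller than the requested one.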

Navigation

The Free-Flyer's ability to locate itself is critical to the success of its mission: it needs this both to maneuver and to associate each detected damage with a specific region of the TPS surface.

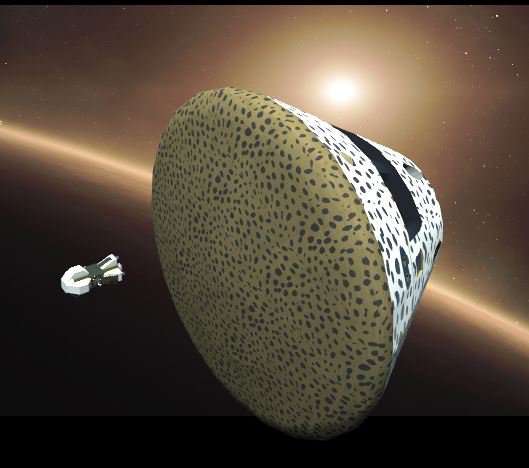

Our navigation system is inspired by nature: individual animals carry unique markings, and the cheetah is a striking example, since no two cheetahs have the same spots.

Inspired by this idea, we devised a set of unique patterns covering the whole surface of the TPS. Given a picture of any part of the TPS surface, Image Processing Algorithms and Deep Learning can identify the corresponding area from the patterns visible in it. Optical images are therefore taken while the LiDAR scans the surface of the Spacecraft, and once damage is detected, the Free-Flyer uses these images to identify its exact location on the TPS surface.
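The pattern lookup can be illustrated with a deliberately simple stand-in for the image-processing and deep-learning pipeline described above. This sketch assumes the spot centres have already been detected in the image (for example with a circle detector) and expressed at a known scale, and it matches them against a catalogue of reference patterns using sorted pairwise distances, a signature that does not change when the pattern is shifted or rotated. The catalogue, region names, and functions are hypothetical.

```python
import math
from itertools import combinations


def signature(spots):
    """Sorted pairwise distances between spot centres (unchanged by shifts and rotations)."""
    return sorted(math.dist(a, b) for a, b in combinations(spots, 2))


def locate(observed, catalogue):
    """Return the catalogue region whose spot pattern best matches `observed`."""
    sig = signature(observed)
    best, best_err = None, math.inf
    for region, spots in catalogue.items():
        ref = signature(spots)
        if len(ref) != len(sig):
            continue  # different number of spots: cannot be the same patch
        err = sum((a - b) ** 2 for a, b in zip(sig, ref))
        if err < best_err:
            best, best_err = region, err
    return best


# Hypothetical catalogue: spot centres (cm) of two patches painted on the TPS
catalogue = {
    "nose": [(0, 0), (3, 0), (0, 4)],
    "flank": [(0, 0), (1, 0), (0, 1)],
}
# The "nose" patch seen shifted and rotated by 90 degrees
print(locate([(10, 10), (10, 13), (6, 10)], catalogue))  # nose
```

Because no two patches share the same spot layout, the best-matching signature identifies the region, much as a cheetah's spots identify the individual.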

Finally, the Free-Flyer navigates around the Spacecraft using these patterns together with radio trilateration. The Spacecraft carries a set of radio antennas, and the Free-Flyer estimates its position relative to the Spacecraft by computing the intersection of the spheres defined by its measured distance to each antenna. A Star-Tracker and sensor fusion further improve the accuracy of this estimate.
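The trilateration step can be sketched for the simplest case: four non-coplanar antennas at known positions in the Spacecraft's frame and noise-free range measurements. Subtracting the first sphere equation |x - p0|^2 = d0^2 from the other three cancels the quadratic term and leaves a 3x3 linear system, solved here with Cramer's rule. A real system would likely use more antennas, a least-squares fit, and the sensor fusion mentioned above; the antenna layout and function names are assumptions.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def trilaterate(antennas, ranges):
    """Position (x, y, z) from four antenna positions and the measured distances to them."""
    p0, d0 = antennas[0], ranges[0]
    A, b = [], []
    for p, d in zip(antennas[1:4], ranges[1:4]):
        # |x-p|^2 - |x-p0|^2 = d^2 - d0^2  =>  2(p - p0).x = d0^2 - d^2 + |p|^2 - |p0|^2
        A.append([2.0 * (p[k] - p0[k]) for k in range(3)])
        b.append(d0 ** 2 - d ** 2 + sum(c * c for c in p) - sum(c * c for c in p0))
    det = det3(A)
    if abs(det) < 1e-12:
        raise ValueError("antennas are coplanar: position is ambiguous")
    # Cramer's rule: replace one column of A with b for each coordinate
    position = []
    for col in range(3):
        m = [row[:] for row in A]
        for r in range(3):
            m[r][col] = b[r]
        position.append(det3(m) / det)
    return tuple(position)


# Hypothetical antenna layout (m) and a Free-Flyer at (3, 4, 5)
antennas = [(0, 0, 0), (10, 0, 0), (0, 10, 0), (0, 0, 10)]
ranges = [math.dist(a, (3, 4, 5)) for a in antennas]
print(trilaterate(antennas, ranges))  # approximately (3.0, 4.0, 5.0)
```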

Conclusion

Inspired by nature's unique visual patterns, we devised a feasible solution to the Free-Flyer's self-location problem around the Spacecraft and, more importantly, to the autonomous identification of damaged regions of the TPS surface. Our approach scans the Spacecraft with linear, uniform trajectories while the Spacecraft spins slowly around its longitudinal axis. This requires much less propellant than trajectories that need continuous or frequent thruster firings. Finally, our design addresses all the items suggested for this challenge.

Finalizing our 30-second video for NASA: it is going to be epic!

```python
# LICENSE: GPL V 3.0
# by MARCELO SERRANO ZANETTI - 28.October.2018
# TO ANIMATE CIRCLE DETECTION IN A 30 SECONDS VIDEO FOR NASA

# LIBRARIES
import math
import random as rand
import sys

import cv2
import numpy as np

rand.seed(None)

# PARAMETERS
infile = 'match_original.mp4'
bground = 'color'                  # 'color' or 'gray' background for the output video
outfile = 'output_%s.avi' % bground
width = 1920                       # the input video is assumed to be 1920x1080
height = 1080
tol = 90                           # darkness threshold (0-255) for the average pixel value
rep = 10                           # number of random pixels sampled per circle
green = (0, 255, 0)                # OpenCV colours are in BGR order
blue = (255, 0, 0)
red = (0, 0, 255)
white = (255, 255, 255)


# CHECK COLLISION BETWEEN CIRCLES
def circlescollide(i, j):
    """Return True if circles i and j, given as (x, y, r), touch or overlap."""
    dx = int(j[0]) - int(i[0])
    dy = int(j[1]) - int(i[1])
    r1 = int(j[2])
    r2 = int(i[2])
    d = math.sqrt(dx * dx + dy * dy)
    return d <= (r1 + r2)


# AVERAGE PIXELS WITHIN A CIRCLE
# MARK CIRCLES THAT ARE BELOW
# THRESHOLD VALUE: DARK CIRCLES!
def avcircle(x, y, r, tol, rep, frame, width, height):
    """Sample `rep` random pixels in the bounding box of circle (x, y, r); return True
    if the samples falling inside the circle average <= tol on all three channels."""
    c0 = 0.0
    c1 = 0.0
    c2 = 0.0
    n = 0.0
    for _ in range(rep):
        px = rand.randint(x - r, x + r)
        py = rand.randint(y - r, y + r)
        # Clamp to 1-based image coordinates [1, width] x [1, height]
        px = min(max(px, 1), width)
        py = min(max(py, 1), height)
        if (px - x) ** 2 + (py - y) ** 2 <= r * r:
            n += 1.0
            c0 += float(frame[py - 1, px - 1, 0])
            c1 += float(frame[py - 1, px - 1, 1])
            c2 += float(frame[py - 1, px - 1, 2])
    return n > 0 and c0 / n <= tol and c1 / n <= tol and c2 / n <= tol


# INPUT AND OUTPUT FILES
cap = cv2.VideoCapture(infile)
fourcc = cv2.VideoWriter_fourcc(*'XVID')
out = cv2.VideoWriter(outfile, fourcc, 30.0, (width, height))

# FRAME COUNTER : PROGRESS
frames = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
frameCounter = 0

# MAIN LOOP
while cap.isOpened():
    ret, frame = cap.read()
    if not ret:
        break
    frameCounter += 1
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    frameg = cv2.cvtColor(gray, cv2.COLOR_GRAY2BGR)  # gray image with 3 channels

    # DETECT CIRCLES
    circles = cv2.HoughCircles(gray, cv2.HOUGH_GRADIENT, 1, 20,
                               param1=50, param2=30, minRadius=10, maxRadius=100)
    # HoughCircles returns None (not an empty array) when no circle is found
    if circles is None:
        print("NoneType")
        break

    # CONVERT PIXEL COORDINATES TO INT
    circles = np.uint32(np.around(circles))

    # PROGRESS LIST
    l = []
    done = {}

    # CHECK EACH CIRCLE TO AVOID OVERLAP BETWEEN TWO OR MORE CIRCLES
    for i in circles[0, :]:
        li = str(i)
        # ONLY CONSIDER DARK CIRCLES
        if not avcircle(int(i[0]), int(i[1]), int(i[2]), tol, rep, frameg, width, height):
            done[li] = 1
            continue
        if li in done:
            continue
        inter = [(int(i[0]), int(i[1]), int(i[2]))]
        done[li] = 1
        for j in circles[0, :]:
            lj = str(j)
            # ONLY CONSIDER DARK CIRCLES
            if avcircle(int(j[0]), int(j[1]), int(j[2]), tol, rep, frameg, width, height):
                # MARK COLLISIONS
                if lj not in done and circlescollide(i, j):
                    inter.append((int(j[0]), int(j[1]), int(j[2])))
                    done[lj] = 1
            else:
                done[lj] = 1
        # AVERAGE COLLISIONS: CONSIDER THE AVERAGE CIRCLE ONLY
        n = len(inter)
        mx = sum(c[0] for c in inter) / n
        my = sum(c[1] for c in inter) / n
        mr = sum(c[2] for c in inter) / n
        l.append((int(mx), int(my), int(mr)))

    # DRAW DETECTED CIRCLES AND THE LINES CONNECTING THEM
    if bground == 'color':
        finalFrame = frame
    elif bground == 'gray':
        finalFrame = frameg
    for i in l:
        cv2.circle(finalFrame, (i[0], i[1]), i[2], green, 5)
        for j in l:
            cv2.line(finalFrame, (i[0], i[1]), (j[0], j[1]), blue, 1)

    # MONITOR PROGRESS (guard against a container that reports no frame count)
    progress = 100.0 * frameCounter / max(frames, 1)

    # ANIMATING TEXT: AFTER 40% OF THE VIDEO, BLINK "MATCH" NEAR THE BOTTOM
    if progress >= 40:
        text = "MATCH"
        font = cv2.FONT_HERSHEY_SIMPLEX
        fontScale = 5
        fontColor = green
        lineType = 4
        thickness = 15
        size = cv2.getTextSize(text, font, fontScale, thickness)
        position = (int((width - size[0][0]) / 2), int(height - size[0][1]))
        if progress % 10 < 5:
            cv2.putText(finalFrame, text, position, font, fontScale, fontColor, thickness, lineType)

    sys.stdout.write("\r PROGRESS: %.2f %%" % progress)
    sys.stdout.flush()

    # WRITE FRAME TO OUTPUT FILE
    out.write(finalFrame)
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# CLEANING UP
cap.release()
out.release()
cv2.destroyAllWindows()
```

SpaceApps is a NASA incubator innovation program.