Penteract | Design by Nature

The Challenge | Design by Nature

Damage-detection drone

In under two days we came up with a drone that inspects spacecraft for micrometeoroid and orbital debris (MMOD) damage. The drone navigates using camera and sensor input and is propelled by CO2 thrusters.

Introduction:

Space is a dangerous place. Leaving the spacecraft to inspect it for damage from micrometeoroids and orbital debris is even more dangerous. We don't want people risking their lives to verify the integrity of the spacecraft, so we built our autonomous free-flyer.

General idea:

We plan to fly around the spacecraft on a preprogrammed path that can be modified to fit our needs. To create movement we have 4 CO2 cartridges mounted on rotating servos, so that we can accelerate along all axes.

Our project's main feature is a machine learning program that takes real-time video input and scans it for real-life objects, drawing polygons on the video's frames to tell us if something is wrong with the spacecraft's outer layers. To properly analyse the camera input, we need to know where we are relative to the spacecraft. For that we need 2 things: position and orientation.

- Position: We detect our position relative to the spacecraft using RFID beacons placed on the spacecraft before launch.

- Orientation: We verify our orientation relative to the spacecraft using IR sensors. Comparing the distances from all 8 sensors to the spacecraft lets us detect unwanted tilt and correct it.

Hardware:

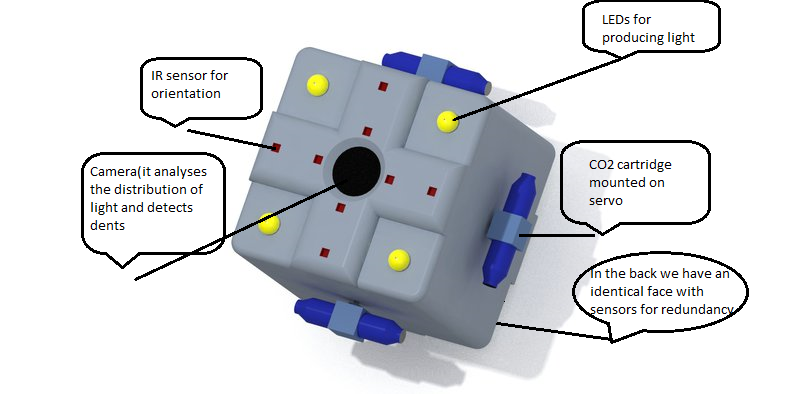

The free-flyer has the shape of a cube. We dedicated two opposite faces to the sensors and camera. We chose two for redundancy, so in the unlikely event that a sensor, the camera or an LED starts malfunctioning, we can rotate from the main face to the second one (more about that in the software chapter).

The remaining 4 faces of the cube make up the drive system. For each face, we thought of mounting the CO2 cartridge on a servo so that instead of covering movement along one axis it covers movement in a plane. Together, the four cartridges can generate forces along all axes. From the ideal gas law we know that p*V = n*R*T, so the temperature and the amount of substance have a dramatic impact on the pressure inside the cartridges. To account for that we thought of placing pressure sensors that report to the processing unit (more about that in the software chapter, again ;) ).
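To illustrate why the cartridge pressure is worth monitoring, here is a minimal sketch that evaluates the ideal gas law for a fixed amount of gas at two temperatures. The function name, the amount of gas, the volume and the temperatures are all hypothetical values chosen for the example. A real CO2 cartridge holds liquefied gas and departs from ideal behaviour, so this is only a first-order estimate.

```python
R = 8.314462618  # molar gas constant, J/(mol*K)


def cartridge_pressure(n_mol, temp_k, volume_m3):
    """Pressure in pascals from the ideal gas law p = n*R*T / V."""
    if n_mol < 0 or temp_k <= 0 or volume_m3 <= 0:
        raise ValueError("need n >= 0, T > 0 and V > 0")
    return n_mol * R * temp_k / volume_m3


if __name__ == "__main__":
    # Hypothetical cartridge: 0.1 mol of gas in 20 mL (2e-5 m^3).
    n, volume = 0.1, 2e-5
    p_sunlit = cartridge_pressure(n, 300.0, volume)  # assumed warm side
    p_shadow = cartridge_pressure(n, 200.0, volume)  # assumed cold side
    print(f"sunlit: {p_sunlit:.0f} Pa, shadow: {p_shadow:.0f} Pa")
    # At fixed n and V the pressure scales linearly with temperature.
    print(f"pressure ratio shadow/sunlit: {p_shadow / p_sunlit:.3f}")
```

Because pressure scales linearly with both temperature and the amount of gas left, a thrust command that ignores the measured pressure would produce a different push every time, which is the reason for feeding the pressure sensors back to the processing unit.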

The camera must be built to withstand the conditions of space and should have a wide field of view.

LEDs are used to aid the camera.

Infrared sensors are used to determine the distance to the spacecraft and the orientation. We want to face the spacecraft directly. If there is any deviation, we can calculate the angle offset by comparing the readings that we get from each IR sensor.
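The angle offset can be sketched as follows, assuming the spacecraft surface is locally flat and that two IR sensors sit a known distance apart on the sensing face, both pointing along the face normal. Under those assumptions the difference between the two readings equals the baseline times the tangent of the tilt. The function names, the four-sensor layout (left, right, top, bottom) and the baseline value are hypothetical; the real 8-sensor arrangement could average several such pairs.

```python
import math


def tilt_angle_deg(d_a, d_b, baseline):
    """Tilt (degrees) between the sensing face and a flat surface.

    d_a, d_b: distances read by two IR sensors, same unit as baseline.
    baseline: separation of the two sensors along the face.
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return math.degrees(math.atan2(d_b - d_a, baseline))


def orientation_error(left, right, top, bottom, baseline):
    """Return (yaw, pitch) offsets in degrees from two sensor pairs."""
    yaw = tilt_angle_deg(left, right, baseline)
    pitch = tilt_angle_deg(bottom, top, baseline)
    return yaw, pitch


if __name__ == "__main__":
    # Assumed readings in metres, sensors 0.2 m apart.
    yaw, pitch = orientation_error(1.00, 1.02, 1.01, 1.01, 0.2)
    print(f"yaw offset: {yaw:.2f} deg, pitch offset: {pitch:.2f} deg")
```

When both angles are zero the drone faces the spacecraft directly; any non-zero value is the correction the thrusters would need to remove.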

The outside of the free-flyer is plated with a reflective material for the purpose of protecting it from radiation.

Software:

Quick explanation of the code:

-->It takes real-time camera input and processes every frame.

-->It initializes multiple arrays of values, one of which stores the grayscale values of the image.

-->It then looks for drastic differences in the grayscale values and draws contours over them.

-->Finally, it outputs the edited frame to an image preview and goes back to square one to process the next video frame.
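The steps above can be sketched without any dependencies, as a stand-in for the OpenCV calls in our app rather than a copy of them. A frame is assumed to be a list of rows of (r, g, b) tuples, the luminance weights are the common 0.299/0.587/0.114 ones, and the threshold of 50 and all function names are hypothetical choices for the example.

```python
def to_grayscale(frame):
    """Convert rows of (r, g, b) pixels to rows of 0-255 gray values."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in frame]


def edge_mask(gray, threshold=50):
    """Mark pixels whose right or lower neighbour differs drastically."""
    height = len(gray)
    width = len(gray[0]) if height else 0
    mask = [[False] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            right = abs(gray[y][x] - gray[y][x + 1]) if x + 1 < width else 0
            down = abs(gray[y][x] - gray[y + 1][x]) if y + 1 < height else 0
            mask[y][x] = max(right, down) > threshold
    return mask


def draw_edges(frame, mask, color=(255, 0, 0)):
    """Return a copy of the frame with marked pixels painted in color."""
    return [[color if mask[y][x] else pixel for x, pixel in enumerate(row)]
            for y, row in enumerate(frame)]


def process_frame(frame, threshold=50):
    """One pass of the loop: grayscale, find differences, draw over them."""
    gray = to_grayscale(frame)
    return draw_edges(frame, edge_mask(gray, threshold))


if __name__ == "__main__":
    dark, bright = (10, 10, 10), (200, 200, 200)
    # Tiny synthetic frame: dark hull on the left, bright patch on the right.
    frame = [[dark, dark, bright, bright] for _ in range(3)]
    for row in process_frame(frame):
        print(row)
```

In the real loop, `process_frame` would be called once per camera frame and its result handed to the preview.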

The image processing itself is very fast, but the transfer from the camera's video input to the drawn-over video output of the app is sometimes slower (it really depends on which device is used and its specs).

Future updates will be shown in this app, including optimizing the video output of the detected damage and using ML (Machine Learning) to tell us what type of damage has been done to our spacecraft.

The base idea is to send this autonomous drone out into space and let it survey our spacecraft. Once it finds some sort of damage, it sends us back a picture of the damage and the type of damage we are seeing, including the depth (if it is a dent or a hole) or the temperature (if it is damage caused by passing high-temperature stars, like our Sun).

We have also thought of using angular momentum to keep our drone in the right orientation, but that would consume more power, and we would have to start and stop the rotors to make the drone follow the spacecraft rather than fly off into outer space without coming back. We will get back to this some other time, when we have more ideas that can make it possible.

The drone's return system records the place where it was powered on (coordinates from the RFID beacons). At the end of the survey, or when asked to, it returns to those coordinates, which makes retrieving the drone much easier. (This means that the drone must only be powered on at the place of launch.)
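A minimal sketch of this return logic, assuming the RFID beacons yield Cartesian coordinates in metres in a spacecraft-fixed frame. The class, method names and tolerance are hypothetical and only show the bookkeeping, not the thruster control.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Position:
    x: float
    y: float
    z: float


class ReturnSystem:
    """Remembers the power-on position and points the way back to it."""

    def __init__(self):
        self._home = None

    def power_on(self, current):
        # Home is wherever the drone is switched on, which is why it
        # must only be powered on at the place of launch.
        self._home = current

    def vector_home(self, current):
        """Displacement (dx, dy, dz) from the current position to home."""
        if self._home is None:
            raise RuntimeError("drone has not been powered on yet")
        return (self._home.x - current.x,
                self._home.y - current.y,
                self._home.z - current.z)

    def distance_home(self, current):
        return math.sqrt(sum(c * c for c in self.vector_home(current)))

    def is_home(self, current, tolerance=0.05):
        """True once the drone is within tolerance metres of home."""
        return self.distance_home(current) <= tolerance


if __name__ == "__main__":
    rs = ReturnSystem()
    rs.power_on(Position(0.0, 0.0, 0.0))
    here = Position(3.0, 4.0, 0.0)
    print(rs.vector_home(here), rs.distance_home(here))
```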

Documentation:

https://www.nasa.gov/audience/foreducators/topnav/...

https://solarsystem.nasa.gov/basics/chapter3-2/

https://www.nasa.gov/press-release/our-solar-syste...

https://www.modernroboticsinc.com/sensors

Code snippets:

https://github.com/Rkoed97/OpenCV-SpaceApps

P.S.

At shutdown, all data sent and received is recorded on internal storage, meaning that if a drone is lost in space or a spacecraft is destroyed, we can find the drone and know exactly what happened, provided one was sent out.

SpaceApps is a NASA incubator innovation program.